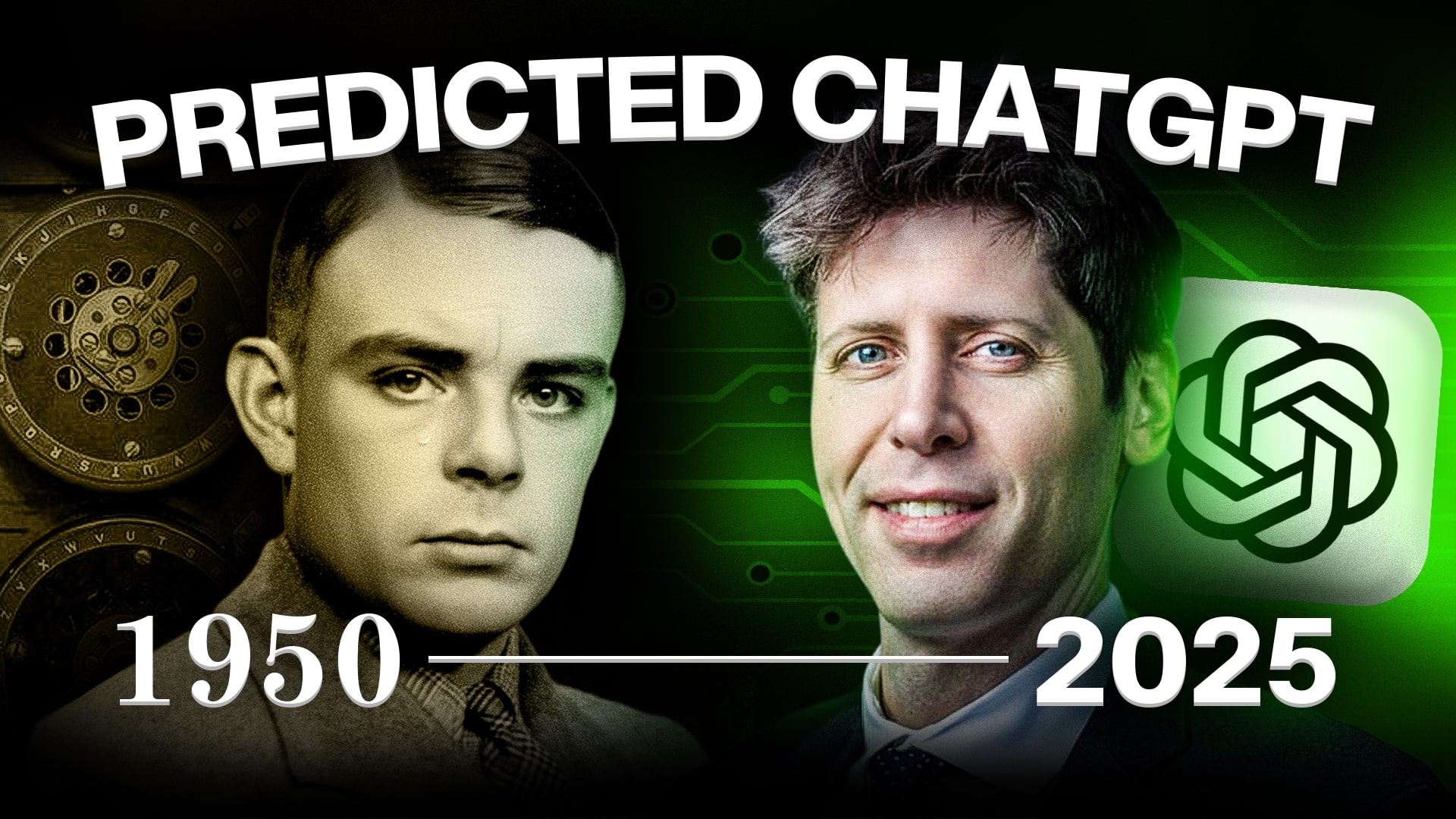

A picture of Alan Turing and Sam Altman

The origins of AI: They predicted ChatGPT in 1950 and everyone laughed

Artificial Intelligence didn’t start with ChatGPT. It began over 70 years ago, when a few visionaries made a prediction so audacious, they were practically laughed out of the room. From Cold War experiments to billion-dollar breakthroughs, the story of AI is full of ambition, failure, and shocking comebacks.

This isn’t a story about a sudden revolution. It’s the story of a forgotten dream, an impossible idea that survived decades of ridicule and failure to become the technology that is now reshaping our world.

How it began

It all started in 1950. The world was rebuilding, computers were the size of living rooms, and their most impressive trick was calculating artillery tables. In this environment, the British mathematician Alan Turing posed a radical, almost childlike question: “Can machines think?”

He proposed what became known as the Turing Test—a thought experiment in which a human judge tries to distinguish between a person and a machine through a typed conversation.

If a computer could hold a conversation indistinguishable from a human, he argued, we might have to call it intelligent. It was a bold idea, pure fantasy to the establishment of the day. But for a handful of dreamers, Turing’s question wasn’t absurd. It was a challenge.

Six years later, that spark became a fire. In the summer of 1956, a group of brilliant and wildly optimistic researchers gathered at Dartmouth College for an eight-week brainstorming session known as The Dartmouth Workshop. Organizers such as John McCarthy and Marvin Minsky gave this new discipline a name: Artificial Intelligence.

Their optimism was boundless. Their stated goal was to create machines that could use language, form abstract ideas, and even improve themselves. The mood lasted: in 1965, Herbert Simon, one of the attendees, predicted that ‘machines will be capable, within twenty years, of doing any work a man can do.’ They genuinely believed a truly intelligent machine could be built within a single generation.

The Dartmouth Workshop is now widely regarded as the founding event of the field of artificial intelligence.

In the 1960s and ’70s, AI took its first practical steps. Programs like ELIZA, a chatbot that mimicked a psychotherapist by rephrasing your own sentences back at you, gave a glimpse of the future. Medical systems like MYCIN attempted to assist doctors in diagnosing infections.
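The rephrasing trick behind ELIZA can be illustrated with a few lines of Python. This is only a rough sketch under stated assumptions: the patterns, the pronoun table, and the function names `reflect` and `respond` are invented for illustration and are not taken from the original ELIZA script.

```python
import re

# Pronoun swaps so the user's words can be turned back on them
# (hypothetical table, much smaller than anything a real chatbot would use).
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are"}

# (pattern, reply template) pairs, tried in order. Invented for this sketch.
RULES = [
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]


def reflect(fragment):
    """Swap first-person words for second-person ones."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(sentence):
    """Rephrase the user's sentence as a question, or fall back to a stock reply."""
    text = sentence.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."


print(respond("I feel sad about my job."))
print(respond("The weather is nice"))
```

The program has no idea what sadness or a job is. It matches a pattern, swaps a few pronouns, and hands the sentence back as a question, which is why anything outside its patterns gets the same stock reply.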

Systems like MYCIN were called expert systems: rule-based programs that followed a strict ‘if this, then that’ logic. They worked, but only in narrow domains. The moment you went off-script, these brittle systems shattered. They were clever tricks, but they weren’t truly smart.
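A toy version of the ‘if this, then that’ logic shows both why such systems worked and why they broke. The rules below are made up purely for illustration (they are not MYCIN’s rules and not medical knowledge), and `diagnose` is a hypothetical helper name.

```python
# Each rule pairs a set of required findings with a conclusion.
# These rules are invented for the example, not real medical guidance.
RULES = [
    ({"fever", "cough"}, "suspect respiratory infection"),
    ({"fever", "rash"}, "suspect viral illness"),
]


def diagnose(findings):
    """Return every conclusion whose conditions are all present in the findings."""
    facts = set(findings)
    conclusions = [
        conclusion for conditions, conclusion in RULES if conditions <= facts
    ]
    # Off-script input matches nothing: the system has no way to guess.
    return conclusions or ["no rule applies"]


print(diagnose(["fever", "cough"]))
print(diagnose(["headache"]))
```

Every answer the program can give was typed in by a person beforehand. An input nobody wrote a rule for, like the headache in the second call, simply falls through.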

By the 1970s, reality set in. The grand promises from Dartmouth looked like embarrassing failures. Computers just weren’t powerful enough, and reproducing the simple common sense of a child proved incredibly hard.

As the promised breakthroughs never showed up, funding dried up. The dream of AI hit a deep freeze known as the AI Winter. A critical blow came from reports, such as the United Kingdom’s 1973 Lighthill report, that declared AI had failed to deliver on its hype, leading to massive budget cuts.

Researchers had overpromised, governments had lost patience, and for many, AI had become a dirty word. But beneath the surface, a few scientists kept the dream alive, quietly experimenting.

Hope restored

In the 1980s, a breakthrough restored hope. A learning method called backpropagation, championed by “the Godfather of AI” Geoffrey Hinton, made it practical to train neural networks with multiple layers. Loosely inspired by the human brain, these systems could finally learn from data instead of just following rigid rules.

Progress was slow, but the seeds for a revolution were planted. Then, in 1997, IBM’s Deep Blue supercomputer shocked the world by defeating world chess champion Garry Kasparov. It was proof that computers could rival human experts, at least in specific, complex tasks.

Fast forward to the 2010s. Two forces collided with explosive results: the massive troves of data available on the internet, and the incredible processing power of Graphics Processing Units, or GPUs, which had been perfected for the video game industry.

Suddenly, deep learning wasn’t just theory—it worked. In 2012, a neural network from Geoffrey Hinton’s team won the ImageNet competition by a landslide, igniting the deep learning revolution. AI was officially back, soon powering voice assistants, facial recognition, and the first self-driving cars.

The final piece of the puzzle fell into place in 2017. Researchers at Google published a landmark paper introducing the Transformer, a new neural network architecture, under a title that doubled as a slogan: “Attention Is All You Need.” By letting a model weigh every word in a passage against every other word, Transformers made it possible to train enormous language models that track context far better than earlier architectures.
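For readers curious what “attention” means in practice, here is a minimal sketch of scaled dot-product attention, the core operation described in that paper, written in plain Python. The function names and the tiny hand-picked vectors are assumptions for illustration; real Transformers add learned projections, multiple heads, and many stacked layers on top of this.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for query in queries:
        # Score the query against every key, scaled by sqrt of the key size.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k)
            for key in keys
        ]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        output = [
            sum(weight * value[j] for weight, value in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
    return outputs


# Toy input: one query, two identical keys, so both values get equal weight.
result = attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 0.0], [1.0, 0.0]],
    values=[[0.0, 2.0], [4.0, 6.0]],
)
print(result)
```

Because the two keys in the toy input are identical, the query attends to both equally and the output is simply the average of the two value vectors. With different keys, the more relevant one would receive the larger share of the weight.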

OpenAI’s GPT series followed: GPT-2 shocked experts in 2019, GPT-3 amazed the world in 2020, and then in November 2022, a fine-tuned conversational version called ChatGPT was released to the public. It became a household name, reaching an estimated 100 million users in about two months, faster than any consumer app had at the time.

The age of AI is here

Today, AI writes text, generates images, translates languages, and drives cars. The visionaries of the 1950s weren’t wrong; they were just early. But challenges remain: bias in algorithms, the threat of job disruption, and the ultimate question of control.

From Alan Turing’s provocative question to ChatGPT in your pocket, the journey has been anything but a straight line. Now, we live in the world they predicted, and we must grapple with the question: are we building a tool—or a new kind of intelligence? The future of AI may very well define our century.
